Allow me to blow your mind: A database of unlicensed copies of copyrighted works, even millions of works, used to power a tool that outputs protected material from those works can still be fair use. Indeed, those were exactly the facts in the most important fair use case for AI training: the Google Books case.

You wouldn’t know this from reading the breathless headlines that trumpeted recent research on AI memorization as a “Crisis” and a “Big Copyright Risk.” The pre-print paper, newly published by a team of computer science researchers, demonstrates that under some circumstances, AI models can be induced to output some of the works in their training data. The Atlantic’s “AI Watchdog” Alex Reisner is particularly ecstatic in his write-up about the implications of this research, proclaiming that it proves the models “don’t ‘learn,’ they copy,” and “that could change everything” for the copyright arguments around AI.

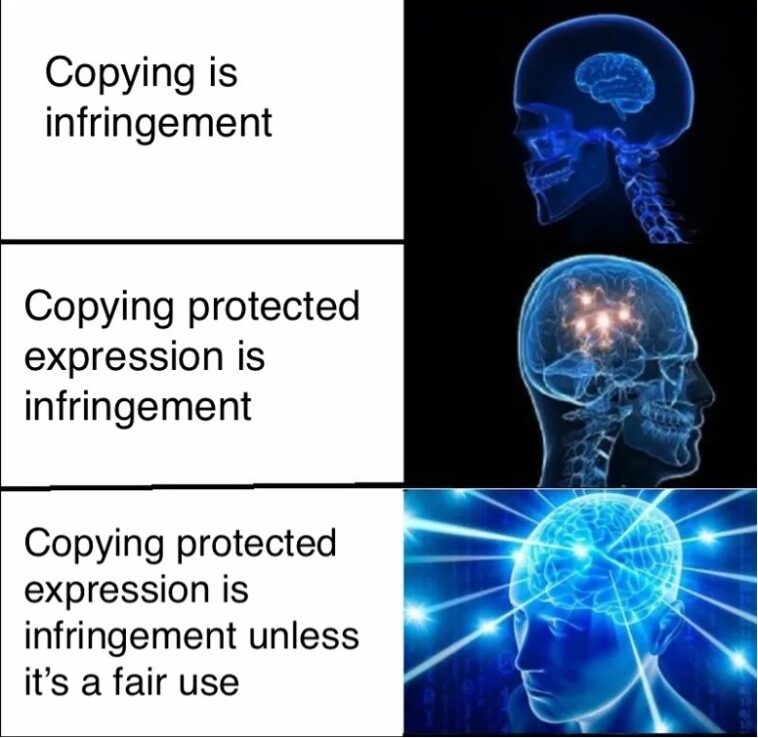

No, not really. No one disputes that AI training involves copying, or that unlicensed AI training, where it is lawful, is lawful because it is protected by fair use. Copying is a precondition for fair use, not a disqualification. Even the alleged persistence of memorized copies “inside” an AI model is not in itself cause for alarm, nor is the possibility that users could extract some of this material (if they can figure out how to do an “N-plus jailbreak,” or whatever). The question for fair use isn’t “Does this thing contain copies?” or even “Can this thing spit out copies if it’s manipulated by a team of computer scientists from Yale and Stanford?” The fair use test is “Is the purpose of this thing to spit out copies? And if not, is it easier to get this thing to spit out copies than it would be to buy a copy?” If the purpose is to create something new, and the tool has guardrails that substantially prevent the revelation of copied works to ordinary users, the use is fair.

These are the holdings in the Google Books case, which was about a literal database of millions of digitized books, created and maintained commercially and without a license. Not only that, but every output of the Google Book Search tool was literally a bunch of snippets of protected bits from books in the database. Google Books was everything that alarmists are saying AI might be. It was also fair use.

Copyright is not a gotcha game of proving that some copying happened somewhere, or that someone can somehow use your tool to make a free copy. A determined thief can get a free book by shoplifting from a bookstore or photocopying a library’s copy; that doesn’t make bookstores, libraries, or photocopiers illegal. The driving force in the fair use analysis is not the existence or persistence of copies or the possibility of a bad actor figuring out a way to infringe; it’s the transformative nature of the user’s purpose. As two federal judges have observed, AI is likely to be the most transformative technology any of us sees in our lifetimes, in the technical copyright sense of the word. The sooner we get our heads around this, the easier it will be to resist yellow journalism, moral panics, and pseudo-legal FUD about AI and copyright.